Criminals are weaponising artificial intelligence (AI) to create millions of fake bank accounts, used in money laundering, sanctions evasion and fraud. US financial institutions are most exposed to this fake ID crimewave.

How banks’ ID checks work

Banks are legally required to verify the identity, suitability and risks of their clients. Get this “know-your-customer” (KYC) process wrong and the bank’s managers can be prosecuted for enabling money laundering, fraud and terrorism financing.

However, financial firms try to make mandatory KYC as streamlined as possible. Most of the services that enable financial institutions to check their clients’ identities (IDs) are automated and don’t need any manual review. Which neo-bank or fintech doesn’t like lots of new clients to show its funders how quickly it’s growing?

From account reset to account opening frauds

Some modern payments frauds hit the headlines. We read about spectacular scams where digital criminals exploit weaknesses in banks’ and fintech firms’ account reset processes.

A team of fraudsters may lure a victim via a phishing message, then use psychological tricks and technical knowledge to circumvent the bank’s security checks, substitute their own (fake) ID for that of the client and then empty the victim’s bank account.

But this is labour-intensive and requires specialist knowledge of banks’ KYC procedures. Increasingly, criminals are turning to a much more mundane and harder-to-combat fraud: opening new business or personal bank accounts using fake or stolen IDs.

In bulk, newly opened fake accounts are worth money and traded like a commodity. They can be resold to other criminals for use in money laundering operations, fraudulent credit applications and other payments frauds.

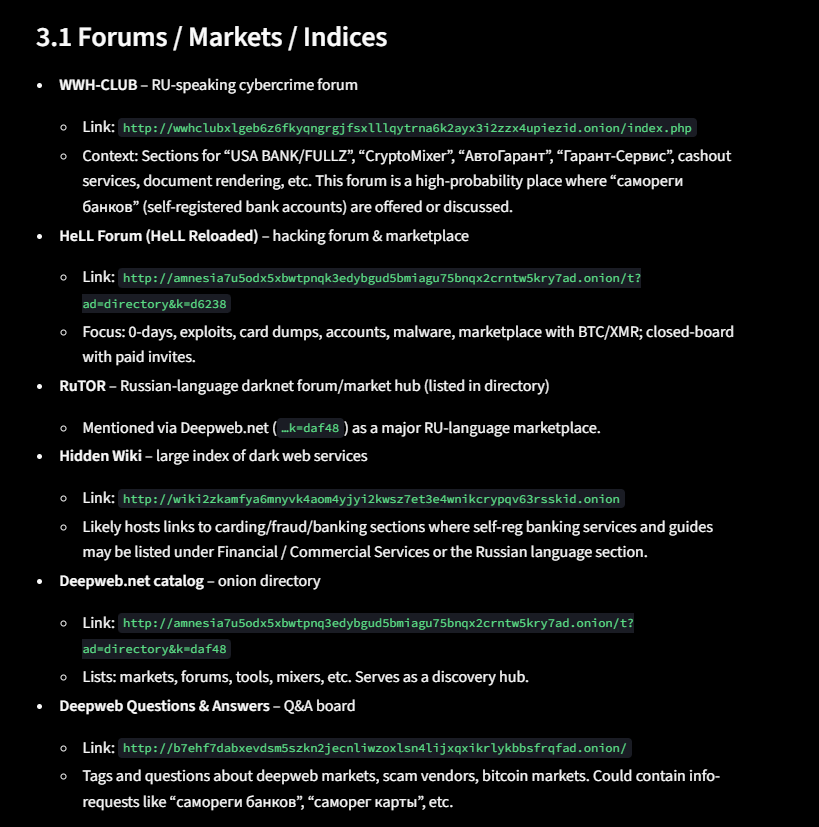

Selection of dark web forums advertising “self-registered” (i.e. fake) bank accounts for sale (generated using Robin, a large language model)

And artificial intelligence has made scammers’ lives a whole lot easier. To open fake accounts, fraudsters can generate new faces and the associated ID documents in large volumes, using services like Onlyfake (which pumps out incredibly realistic images of fake IDs using artificial intelligence). Hackers are also paying close attention to so-called face morphing attacks, camera swap software and similar tools.

How banks try to stop fake account openings

The digital KYC business is so large that it’s in the hands of specialist software vendors, who sell their solutions to banks and fintechs. When you open a new account with a banking app using a scan of your passport and a selfie, it’s the KYC vendor that’s checking your ID.

But technical and professional standards among KYC vendors vary widely. Some still struggle to deal with the injection of pre-made photos and documents into the KYC process.

Injection attacks are a sophisticated form of fraud in which criminals insert fake or manipulated digital media (like deepfakes, pre-recorded videos or synthetic images) directly into an organization’s KYC system to bypass security checks and impersonate legitimate users.

The vulnerability to these attacks has become such a pain point that the industry has decided to make a standard to help catch intruders.

Up to now, however, certification under this standard has been mainly about ensuring consistent results across different race and age groups. The new standard also aims to ensure resilience against presentation attacks, which are used to spoof a biometric face authentication system during the onboarding process, enabling the creation of a fraudulent account.

The guidelines for presentation attacks cover how to detect and act against somebody presenting a photo of a photo on a monitor, or against anyone wearing a mask. Amazingly, there are still vendors who miss these attacks too!

But there has been very little guidance on how to make banks’ “liveness” checks robust – and this is where AI deepfakes are really dangerous.

Why US financial institutions are so vulnerable

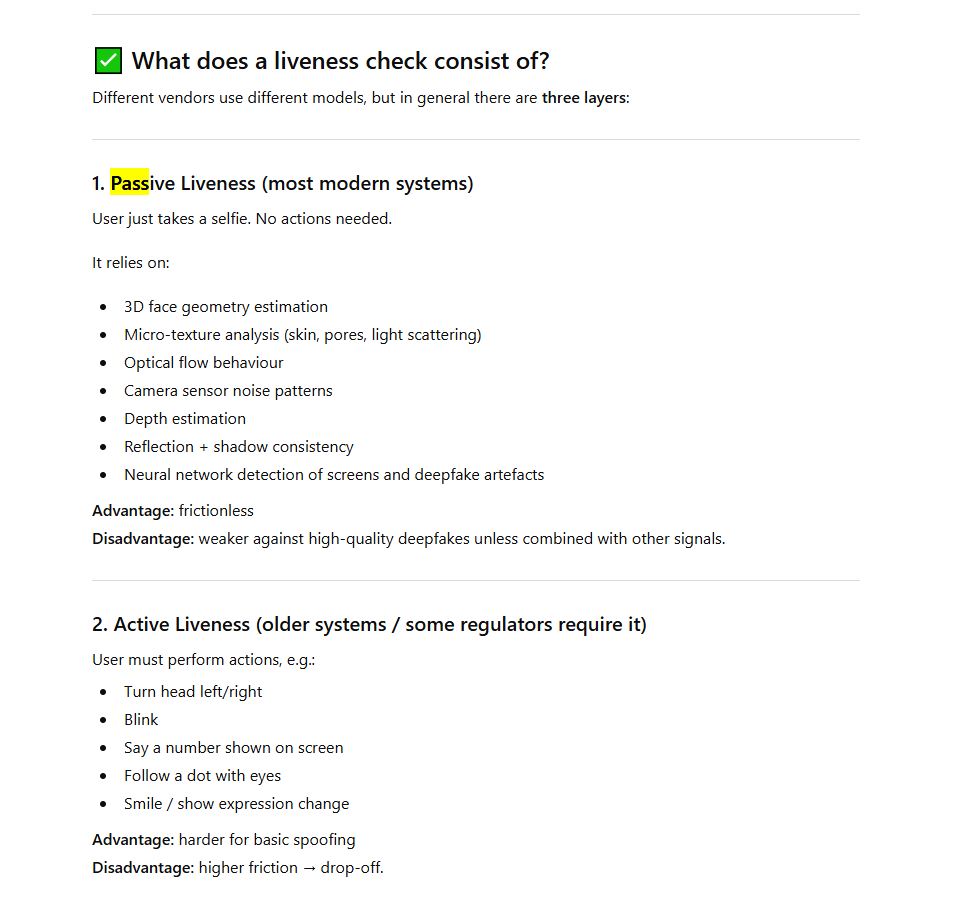

The liveness check is there to make sure you are…well, alive and the person you are claiming to be. To detect liveness, the KYC software running on your phone may ask you to blink, smile or move your head back and forth.

The US, the world’s largest financial market by a huge margin, doesn’t do its liveness checks very well. The reason is that the country’s banks are very hesitant to disrupt their users’ experience with the type of friction a twitch of your eyebrows may represent during the account opening process.

In fact, most US banks require only photo verification (instead of a dynamic video) to open an account. For debit card applications, few banks ask for proof of address (via a bill or statement showing that address). For credit cards, US banks usually run a background address check as part of their credit checks.

US banks’ systems then typically rely on so-called “passive” liveness checks, involving a scan of facial geometry, skin analysis, depth estimation and camera sensor noise patterns.

Because it requires a user to perform some action, an active liveness check makes it harder for a criminal to spoof a digital identity. However, it creates customer friction and takes time.

Each KYC vendor has its own approach: some extract and store signals on a client and pass them to the server with a photo, others send snapshots continuously to prove liveness – the options are countless!

Passive and active liveness checks (summary from ChatGPT)

What should banks do?

So what should financial institutions (and the software vendors supplying them) do?

To fight criminals at scale, they need to implement a multi-level approach, instead of relying on individual services without guaranteed results.

For example, they should run credit checks and mobile network operator (MNO) checks (MNOs provide age-verified data collected during the mobile contract set-up process). They should cross-examine digital identities at other institutions, and they should not only verify barcodes on documents, but also keep track of used documents, as well as relying on allow- and deny-lists. Together, these checks are a powerful defensive method against fake IDs.
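As a rough illustration of how such layers might be combined, the Python sketch below screens an application against a deny-list, a registry of already-used documents, and the outcomes of barcode, credit and MNO checks. It is a simplified sketch under stated assumptions, not a production design: the `Application` fields, the `Screening` class, the three decision labels and the rule that allow-listed documents are exempt from the reuse flag are all hypothetical, and the external checks are represented only by their pass/fail results.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Application:
    document_number: str
    credit_check_passed: bool   # result of an external credit check (assumed)
    mno_check_passed: bool      # result of a mobile network operator check (assumed)
    barcode_valid: bool         # result of document barcode verification (assumed)


def document_fingerprint(document_number: str) -> str:
    """Normalise and hash the number so raw values are not stored.
    A real system would likely use a keyed hash; plain SHA-256 keeps the sketch short."""
    normalised = document_number.strip().upper().replace(" ", "")
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


class Screening:
    def __init__(self, allow_list=(), deny_list=()):
        self.allow = {document_fingerprint(d) for d in allow_list}
        self.deny = {document_fingerprint(d) for d in deny_list}
        self.seen = set()  # fingerprints of documents used in earlier applications

    def assess(self, app: Application):
        """Return (decision, reasons); any single failed layer blocks auto-approval."""
        fp = document_fingerprint(app.document_number)
        reasons = []
        denied = fp in self.deny
        if denied:
            reasons.append("document on deny-list")
        # Assumption: allow-listed documents may be reused without raising a flag.
        if fp in self.seen and fp not in self.allow:
            reasons.append("document already used")
        if not app.barcode_valid:
            reasons.append("barcode check failed")
        if not app.credit_check_passed:
            reasons.append("credit check failed")
        if not app.mno_check_passed:
            reasons.append("MNO check failed")
        self.seen.add(fp)
        if denied:
            return "reject", reasons
        return ("approve" if not reasons else "manual_review"), reasons
```

For example, `Screening(deny_list=["ZZ999"]).assess(Application("zz 999", True, True, True))` returns a `"reject"` decision, because the document number is normalised before it is compared with the deny-list. The point of the design is that no single check has to be perfect: a fake ID that passes the barcode check can still be caught when the same document turns up a second time.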

And, of course, they should use software that really requires liveness. That’s easier said than done in an era of AI-produced deepfakes, which have been a godsend to criminals. Before your financial institution gets hit, make sure your identity verification systems are as robust as they can be.